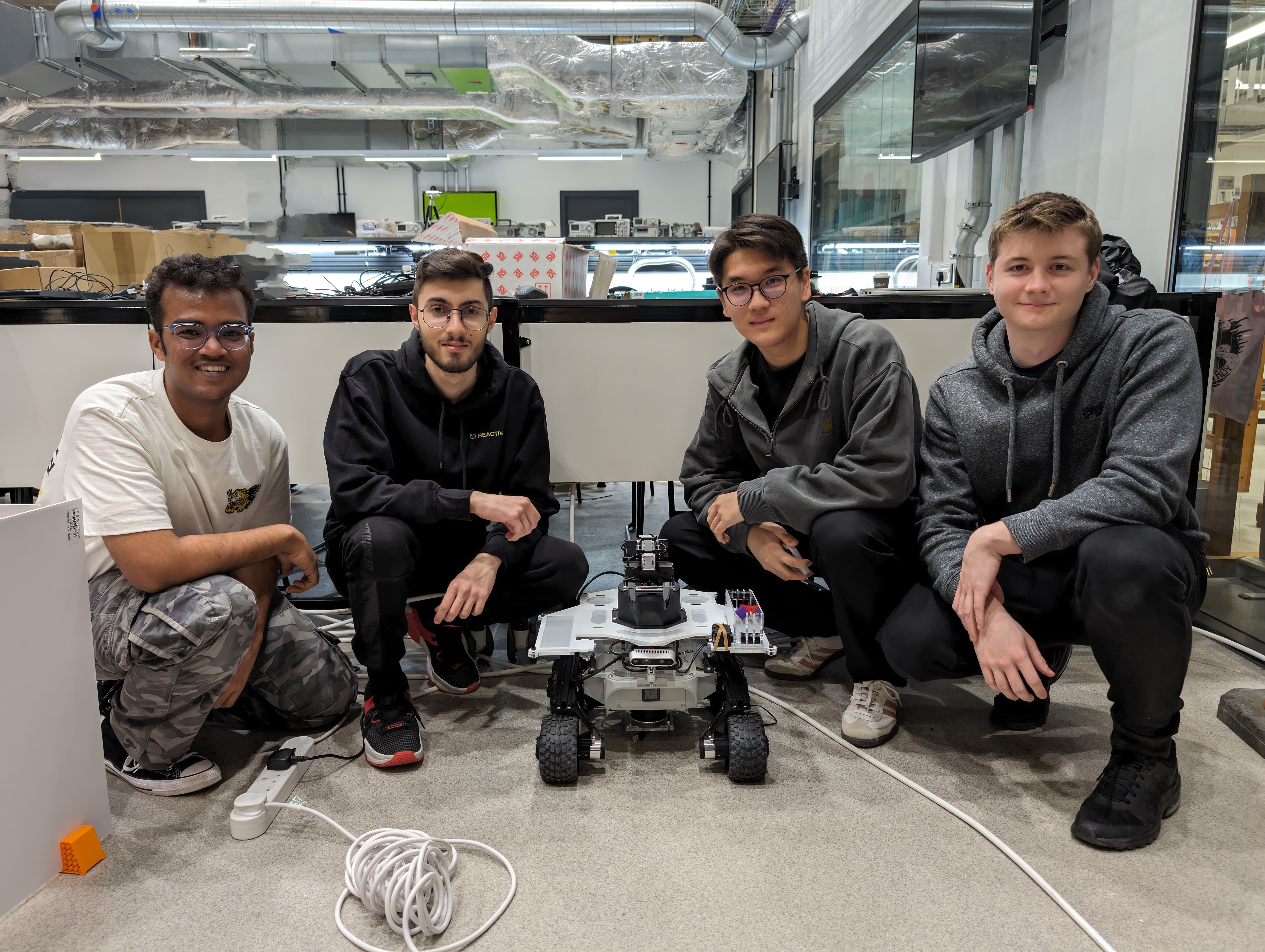

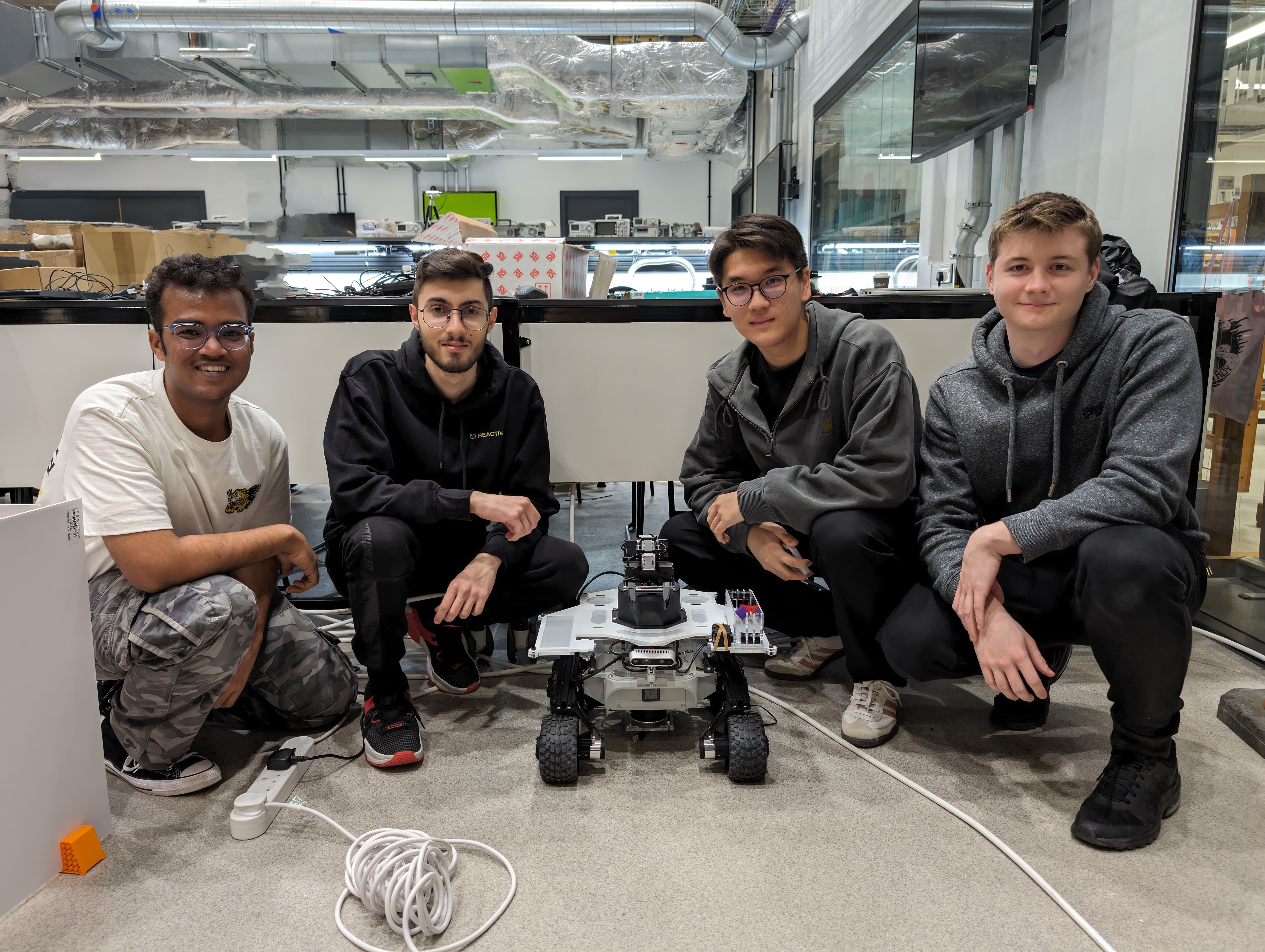

While studying at the University of Manchester, I led a team of postgraduate engineers to assemble a Leo Rover and a Trossen PincherX 150 manipulator that work together, using ROS2 code, to find and retrieve a small wooden cube from an unknown environment with a high degree of autonomy.

The project had four main sections for our team to complete.

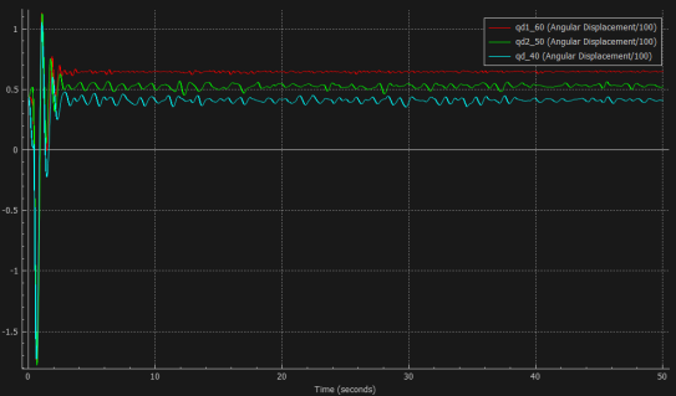

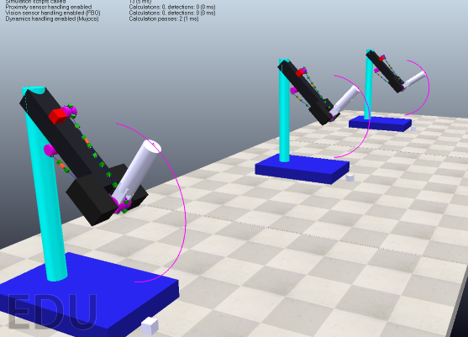

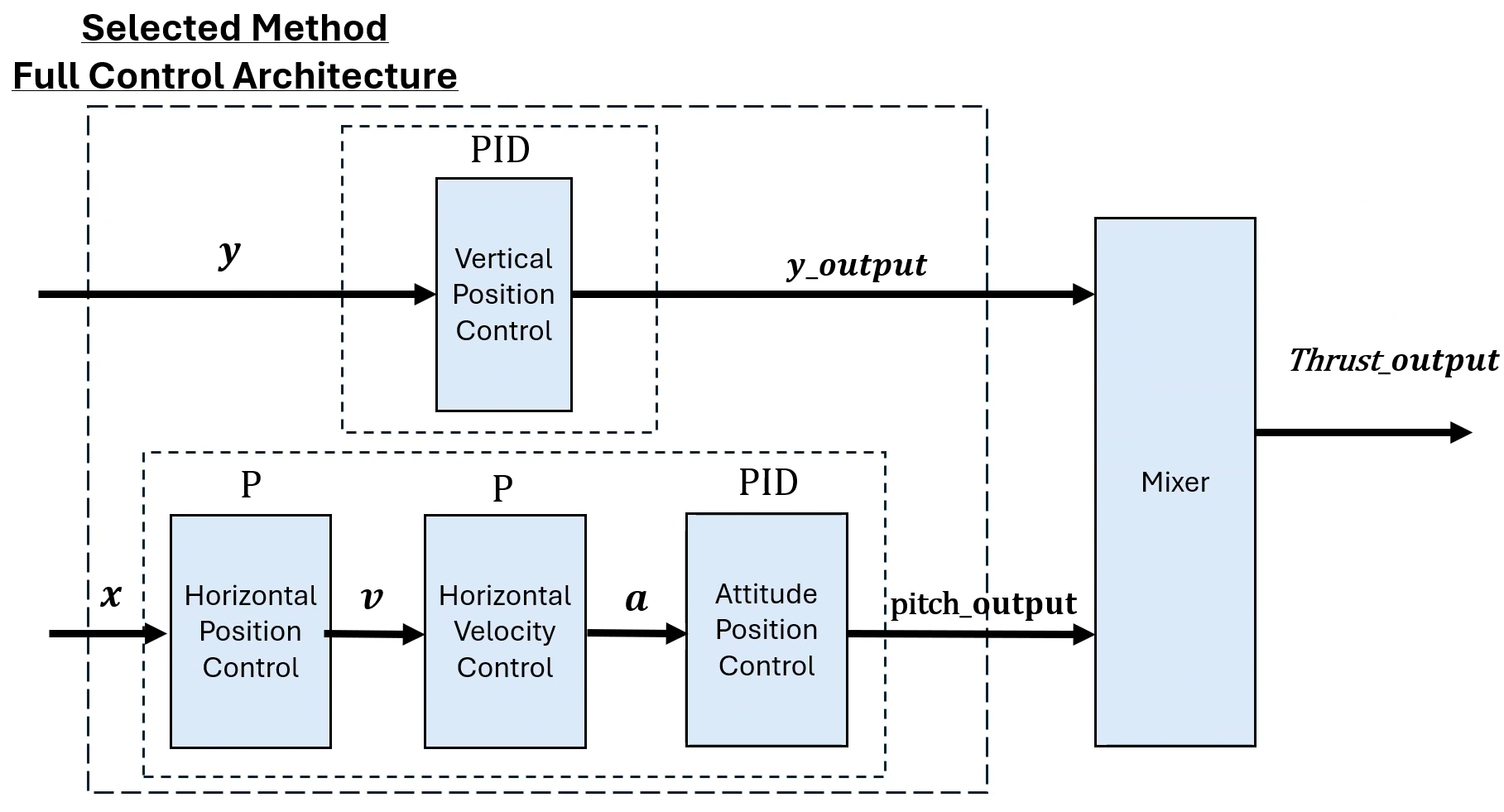

Manipulator Control

We wrote code that publishes joint states as ROS messages, which the Trossen manipulator's built-in libraries use to move the arm into specific configurations.

With this setup, we could configure the arm to follow a set point-to-point path to pick up objects spotted by the camera.
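The point-to-point motion described above can be sketched as linear interpolation in joint space between a few fixed configurations. This is a minimal, framework-free illustration, not our actual ROS2 code. The joint names and radian values below are hypothetical placeholders. On the real arm, each intermediate configuration would be handed to the Trossen libraries (or published as a ROS message) rather than printed.

```python
from typing import Dict, List

# Hypothetical joint names; take the real names and limits from the arm's configuration.
JOINTS = ["waist", "shoulder", "elbow", "wrist_angle", "wrist_rotate"]

Config = List[float]  # one joint angle per entry in JOINTS, in radians


def interpolate(start: Config, goal: Config, steps: int) -> List[Config]:
    """Linearly interpolate from start to goal, returning steps + 1 configurations."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    return [
        [s + (k / steps) * (g - s) for s, g in zip(start, goal)]
        for k in range(steps + 1)
    ]


def point_to_point(waypoints: List[Config], steps: int) -> List[Config]:
    """Chain waypoints into one path, keeping each shared waypoint only once."""
    path = [list(waypoints[0])]
    for a, b in zip(waypoints, waypoints[1:]):
        path.extend(interpolate(a, b, steps)[1:])
    return path


def as_named(config: Config) -> Dict[str, float]:
    """Pair each angle with its (hypothetical) joint name, e.g. for logging."""
    return dict(zip(JOINTS, config))


# Hypothetical set points: home -> above the cube -> grasp pose.
HOME = [0.0, 0.0, 0.0, 0.0, 0.0]
PRE_GRASP = [0.0, 0.5, 0.3, 0.6, 0.0]
GRASP = [0.0, 0.8, 0.2, 0.8, 0.0]

if __name__ == "__main__":
    for config in point_to_point([HOME, PRE_GRASP, GRASP], steps=5):
        print(as_named(config))
```

With `steps=5`, the three set points produce 11 configurations (1 + 2 × 5). Because each joint moves linearly between two values, every intermediate configuration stays within the joint limits whenever both endpoints do.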

Design and Construction Control

We were given all the components for this project, but had to build a frame to mount all of the devices and peripherals on top of the Leo Rover platform.

The fabricated frame met all the design requirements within the set budget.

Path Planning

The robot was able to map and navigate through an unknown environment while avoiding nearby obstacles. This was achieved through sensor fusion of the LiDAR, IMU and wheel encoders.
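The source does not say which fusion method was used. A common pattern is a Kalman filter that predicts motion from the wheel encoders and corrects it with the IMU. As a minimal sketch of that idea, assuming only heading is estimated, the scalar filter below predicts yaw from an encoder-derived yaw rate and corrects it with an IMU yaw reading. The noise values `q` and `r` are hypothetical. The LiDAR, used for mapping and obstacle avoidance, is not modelled here. A full system would typically run a multi-state EKF, for example the one in the ROS `robot_localization` package.

```python
import math
from dataclasses import dataclass


def wrap(angle: float) -> float:
    """Wrap an angle to the range [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


@dataclass
class HeadingKF:
    theta: float = 0.0  # heading estimate (rad)
    p: float = 1.0      # variance of the heading estimate
    q: float = 0.01     # process noise added per prediction (hypothetical)
    r: float = 0.05     # IMU heading measurement variance (hypothetical)

    def predict(self, yaw_rate_enc: float, dt: float) -> None:
        """Propagate heading using the yaw rate derived from the wheel encoders."""
        self.theta = wrap(self.theta + yaw_rate_enc * dt)
        self.p += self.q

    def update(self, theta_imu: float) -> None:
        """Correct the heading with an IMU yaw measurement."""
        innovation = wrap(theta_imu - self.theta)  # shortest angular difference
        k = self.p / (self.p + self.r)              # Kalman gain
        self.theta = wrap(self.theta + k * innovation)
        self.p *= 1.0 - k


if __name__ == "__main__":
    kf = HeadingKF()
    # Encoders report 0.1 rad/s over 1 s; the IMU then reads 0.12 rad.
    kf.predict(yaw_rate_enc=0.1, dt=1.0)
    kf.update(theta_imu=0.12)
    print(f"heading={kf.theta:.4f} rad, variance={kf.p:.4f}")
```

The gain `k` weights the IMU reading by how uncertain the encoder prediction has become: the larger `p` is relative to `r`, the further the estimate moves toward the IMU value.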

Object Detection and Computer Vision

Object detection used a depth camera that published the colour and depth information for each frame. We implemented a colour-based detection algorithm that can accurately identify different cubes placed in the camera's field of view.
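A colour-based detector of this kind usually thresholds pixels in HSV space, takes the centroid of the matching pixels and reads the depth image at that point. The sketch below shows that idea with the Python standard library only. It assumes the depth image is already aligned to the colour image and that both are given as nested lists. The hue, saturation and value thresholds are hypothetical, and this is not the project's own implementation.

```python
import colorsys
from typing import List, Optional, Tuple

RGB = Tuple[int, int, int]


def detect_colour(
    rgb: List[List[RGB]],
    depth: List[List[float]],
    hue_range: Tuple[float, float],
    min_sat: float = 0.5,   # hypothetical threshold, reject greys and whites
    min_val: float = 0.3,   # hypothetical threshold, reject very dark pixels
    min_pixels: int = 1,
) -> Optional[Tuple[float, float, float]]:
    """Return (row, col, depth) of the centroid of matching pixels, or None."""
    lo, hi = hue_range  # colorsys hue is in [0, 1)
    row_sum = col_sum = count = 0
    for r, line in enumerate(rgb):
        for c, (red, green, blue) in enumerate(line):
            h, s, v = colorsys.rgb_to_hsv(red / 255, green / 255, blue / 255)
            # If lo > hi the range wraps around 0 (needed for red).
            in_hue = lo <= h <= hi if lo <= hi else (h >= lo or h <= hi)
            if in_hue and s >= min_sat and v >= min_val:
                row_sum += r
                col_sum += c
                count += 1
    if count < min_pixels:
        return None
    cr, cc = row_sum / count, col_sum / count
    # Read the aligned depth image at the nearest pixel to the centroid.
    return cr, cc, depth[round(cr)][round(cc)]


if __name__ == "__main__":
    G, B = (128, 128, 128), (0, 0, 255)
    image = [[G, B, G], [G, B, G], [G, B, G]]
    depth_m = [[1.0, 1.0, 1.0], [1.0, 0.42, 1.0], [1.0, 1.0, 1.0]]
    print(detect_colour(image, depth_m, hue_range=(0.6, 0.7)))
```

Passing `hue_range=(0.95, 0.05)` selects red, whose hue wraps around 0. Raising `min_pixels` ignores isolated noisy pixels.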

The full report can be found here, along with the video covering the project's design requirements.